Yale Engineering faculty pave new paths in quantum exploration

This story originally appeared in Yale Engineering magazine.

In classical computing, information comes in the form of bits corresponding to ones or zeros. In quantum computing, information is stored in special devices with quantum properties that are known as quantum bits, or “qubits.”

The qubits can each represent a one or a zero, or — confoundingly — both one and zero at the same time. This “quantum parallelism” is one of the properties that enables quantum computers to make calculations that will potentially be orders of magnitude faster than what is possible on classical supercomputers, which will in turn transform multiple industries and revolutionize everyday life.
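
To make superposition a little more concrete, here is a minimal Python sketch. It assumes the standard textbook model of a qubit as a pair of complex amplitudes whose squared magnitudes give the probabilities of measuring 0 or 1. The helper names (`hadamard`, `probabilities`, `uniform_register`) are illustrative and do not come from any particular quantum software library.

```python
import math

def hadamard(state):
    """Apply a Hadamard gate to a single-qubit state [amp0, amp1]."""
    a0, a1 = state
    h = 1 / math.sqrt(2)
    return [h * (a0 + a1), h * (a0 - a1)]

def probabilities(state):
    """Born rule: each outcome's probability is |amplitude|^2."""
    return [abs(a) ** 2 for a in state]

def uniform_register(n):
    """State of n qubits after a Hadamard on each: 2**n equal amplitudes."""
    amp = 1 / math.sqrt(2 ** n)
    return [amp] * (2 ** n)

zero = [1 + 0j, 0j]               # the |0> state
plus = hadamard(zero)             # equal superposition of |0> and |1>
print(probabilities(plus))        # approximately [0.5, 0.5], up to rounding
print(len(uniform_register(10)))  # 1024 amplitudes describe just 10 qubits
```

Measuring such a register still returns only one outcome. Quantum algorithms get their speedups by making the amplitudes interfere before measurement, not by reading out all 2**n of them.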

But achieving even one stable qubit is tricky. Even trickier is getting enough of them to build an error-free, truly useful quantum computer. The consensus among those in the field is that we're still some way off from that point, but progress is being made. At Yale Engineering, several faculty in Computer Science, Electrical Engineering, and Applied Physics are working to pave the way toward practical quantum computing and to prepare for its arrival. Among them are Katerina Sotiraki, Hong Tang, and Shruti Puri. Here's a look at some of the work they're doing:

Katerina Sotiraki

In recent years, consumers of technology news may have come across ominous headlines warning us of the imminent “Q-Day.” In theory, that's when quantum computing will have advanced to the point where it can easily dismantle even the most complex and sophisticated computer security systems currently in place. Encryption keys that would take today's classical computers trillions of years to crack, for instance, could be broken by a fully powered quantum computer in mere seconds.

Katerina Sotiraki, one of the most recent additions to the Computer Science Department, is working to ensure that Q-Day never arrives.

Thanks to computer cryptography, we're able to use digital technology without fear of getting hacked. And cryptography has made a great deal of progress over the last few decades, as the field has developed many powerful protocols with provable security, such as public-key encryption schemes and signature schemes.

The arrival of quantum computing throws a wrench into all of this work. Once quantum computing has advanced to a certain degree, and an attacker has access to a quantum computer, today’s cryptographic protocols will be at risk.

Preparing for that eventuality, Sotiraki’s research uses tools from mathematics and theoretical computer science to build cryptographic protocols that could hold up against quantum attacks. This includes understanding the complexity of widely used cryptographic assumptions — that is, the problems designed to foil hackers — and constructing security protocols that can defend against future and more advanced quantum computers.

“One of the main approaches is that we try to build new protocols based on assumptions that we believe will resist quantum attacks,” she said.

Current security systems tend to rely on the difficulty of factoring large numbers into primes or of computing discrete logarithms, she said.

“Theoretically, if we had a very powerful quantum computer, we could break these assumptions,” she said. “So for my work, I use different types of assumptions — specific assumptions that we believe will keep us quantum-secure in the sense that people have tried to break them using quantum algorithms and they have not succeeded.”
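
As an illustration of what a factoring-based assumption looks like, here is a toy version of textbook RSA, the best-known scheme of this kind, in Python. The article does not name RSA; it is used here only as a familiar example. The primes are deliberately tiny and `trial_division` is a hypothetical attacker helper. The point is that anyone who can factor the public modulus can rebuild the private key. For real key sizes no known classical method can do that in practice, but Shor's algorithm on a large enough quantum computer could.

```python
# Textbook RSA with tiny, insecure primes (illustration only).
# pow(x, -1, m) computes a modular inverse and needs Python 3.8+.
p, q = 61, 53
n = p * q                # public modulus
phi = (p - 1) * (q - 1)  # secret: easy to compute only if you know p and q
e = 17                   # public exponent
d = pow(e, -1, phi)      # private exponent

message = 65
ciphertext = pow(message, e, n)
assert pow(ciphertext, d, n) == message  # the key owner decrypts normally


def trial_division(m: int):
    """Hypothetical attacker helper: factor m by brute force (fine for tiny m)."""
    f = 2
    while f * f <= m:
        if m % f == 0:
            return f, m // f
        f += 1
    raise ValueError("no nontrivial factor found")


# An attacker who sees only (n, e) factors n and rebuilds the private key.
p2, q2 = trial_division(n)
d2 = pow(e, -1, (p2 - 1) * (q2 - 1))
print(pow(ciphertext, d2, n))  # recovers the original message
```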

Specifically, Sotiraki is working on what's known as lattice cryptography, which is built on lattices: regular, grid-like arrangements of points in many dimensions. Because it's believed to be extremely difficult to recover information hidden within such a high-dimensional lattice, this approach could be key to developing a system that can withstand attacks from both classical and quantum computers.
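
Sotiraki's own constructions aren't described here, but a standard textbook example of lattice-based encryption is Regev's scheme, built on the Learning With Errors (LWE) problem: recovering a secret vector from a set of linear equations that have each been perturbed by a little noise. The Python sketch below is a simplified toy version that encrypts a single bit. The names `keygen`, `encrypt`, and `decrypt` and the parameter values `N`, `Q`, and `M` are illustrative assumptions, and the parameters are far too small to be secure.

```python
import random

# Toy parameters, far too small to be secure. With noise in {-1, 0, 1} and
# M = 20 samples, the accumulated noise is at most 20 < Q / 4, so decryption
# always succeeds.
N, Q, M = 8, 97, 20  # secret length, modulus, number of public LWE samples


def dot(xs, ys):
    return sum(x * y for x, y in zip(xs, ys))


def keygen(rng):
    s = [rng.randrange(Q) for _ in range(N)]                      # secret vector
    A = [[rng.randrange(Q) for _ in range(N)] for _ in range(M)]  # random public matrix
    e = [rng.choice((-1, 0, 1)) for _ in range(M)]                # small secret noise
    b = [(dot(row, s) + e_i) % Q for row, e_i in zip(A, e)]       # noisy products A*s + e
    return s, (A, b)


def encrypt(public_key, bit, rng):
    A, b = public_key
    r = [rng.randrange(2) for _ in range(M)]                   # random subset of samples
    u = [dot(r, [row[j] for row in A]) % Q for j in range(N)]  # sum of chosen rows of A
    v = (dot(r, b) + bit * (Q // 2)) % Q                       # sum of chosen b's, plus bit
    return u, v


def decrypt(s, ciphertext):
    u, v = ciphertext
    d = (v - dot(u, s)) % Q  # equals (small noise) + bit * (Q // 2), mod Q
    dist_to_zero = min(d, Q - d)
    dist_to_half = abs(d - Q // 2)
    return 1 if dist_to_half < dist_to_zero else 0


rng = random.Random(42)
secret, public_key = keygen(rng)
for bit in (0, 1, 1, 0):
    assert decrypt(secret, encrypt(public_key, bit, rng)) == bit
print("all bits decrypted correctly")
```

The noise is what is believed to make the problem hard, and it is also why decryption rounds to the nearest of two values instead of reading the bit off exactly. Real schemes use much larger dimensions and carefully chosen noise distributions.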

But there’s still work to be done.

“One challenge right now is that these assumptions are not as efficient as the protocols that we use right now,” she said. “Which makes sense — for the protocols that we use right now, there has been a lot of time and people have really optimized them. And for the new protocols, we are just starting.”

But even in a worst-case scenario, experts believe we’re years if not decades away from a possible Q-Day.

“My understanding is that we are a long way from having quantum computers that would break the current assumptions, which is a good thing, because it allows us to have some time for this transition,” she said.

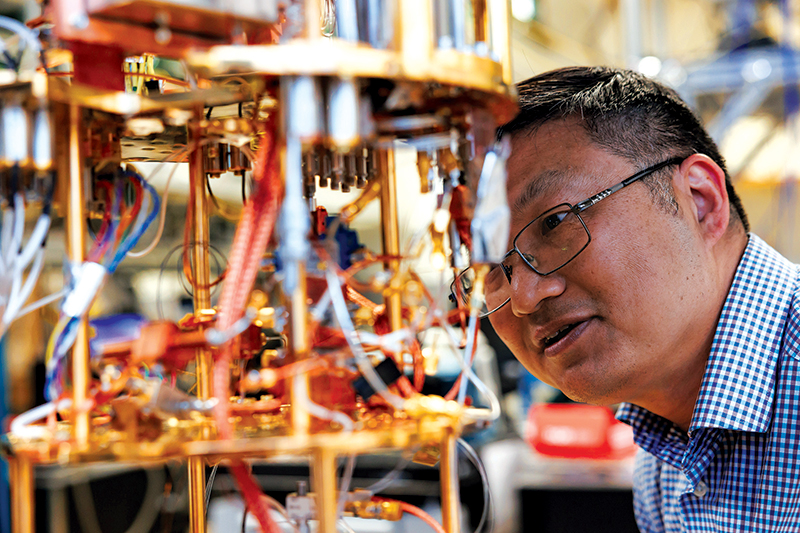

Hong Tang

The research of Hong Tang, the Llewellyn West Jones, Jr. Professor of Electrical Engineering, Applied Physics and Physics, has involved numerous projects that could lead to advances in quantum computing. Recently, his lab developed the first chip-scale titanium-doped sapphire laser, which could go a long way toward making a critical technology much more accessible to researchers.

By combining the efficient performance of titanium-sapphire lasers with the small size of a chip, the new laser could boost the development of quantum computing chips as well as atomic clocks, portable sensors, and other applications.

When the titanium-doped sapphire laser was introduced in the 1980s, it was a major advance in the field of lasers. Key to its success was the material used as its gain medium, that is, the material that amplifies the laser's light. Sapphire doped with titanium ions proved to be particularly powerful, providing a much wider laser emission bandwidth than conventional semiconductor lasers. The innovation led to fundamental discoveries and countless applications in physics, biology, and chemistry.

The table-top titanium-sapphire laser is a must-have for many academic and industrial labs. But these lasers are costly and take up a lot of space, which means you rarely see them outside of a well-resourced laboratory. Tang's chip-scale laser, a project headed up by Yubo Wang, a graduate student in Tang's lab, could help change that.

“Without becoming more widely accessible, these devices will remain a niche item, limiting the potential breakthroughs that they could help produce,” Tang said.

Just weeks before the results of that project were published, Tang’s lab also unveiled the first on-chip device that can detect up to 100 photons at a time.

With very high sensitivity, photon-counting detectors can determine the number of photons even in an extremely weak light pulse, and they are essential to many quantum technologies, including quantum computing, quantum cryptography and remote sensing. However, current photon-counting devices are limited in how many photons they can detect at once: usually only one at a time, and not more than 10. By raising that limit to as many as 100, the Tang lab's device allows for a broader range of quantum technology applications. The project was led by Risheng Cheng and Yiyu Zhou, both postdoctoral associates in Tang's lab at the time.
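
To see why the maximum count matters, here is a small Python sketch. It assumes the pulse has Poissonian photon statistics, as ideal laser (coherent) light does, and treats any pulse carrying more photons than the detector's limit as miscounted. The function name and the mean of 20 photons per pulse are hypothetical values chosen for illustration, not figures from Tang's work.

```python
import math


def overflow_probability(mean_photons: float, max_count: int) -> float:
    """Probability that a pulse carries more photons than a detector can count.

    Assumes the photon number follows a Poisson distribution with the given mean.
    """
    term = math.exp(-mean_photons)  # P(n = 0)
    cumulative = term
    for k in range(max_count):
        term *= mean_photons / (k + 1)  # P(n = k + 1) obtained from P(n = k)
        cumulative += term
    # Clamp tiny negative values caused by floating-point rounding.
    return max(0.0, 1.0 - cumulative)


# A pulse with an average of 20 photons (hypothetical value).
for limit in (1, 10, 100):
    print(limit, overflow_probability(20.0, limit))
```

Under these assumptions, a detector that saturates at 10 photons would miscount the large majority of such pulses, while one that counts to 100 would almost never saturate.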

Not only does Tang's device detect more photons, but it also counts them much faster. Plus, it operates at an easily accessible temperature.

These features matter, Tang said, “especially in lots of fast-emerging quantum applications, such as large-scale Boson sampling, photonic quantum computing, and quantum metrology.”

The complexity of the device required years of design and fabrication, in addition to the time it took to verify its performance. And they’re not done yet. Tang and his team plan to make the device smaller and increase the number of photons it can detect — possibly up to more than 1,000.

Shruti Puri

When she entered the field of quantum research, Shruti Puri was surprised by the division between those working on system architecture and those working on hardware. Because the two groups worked separately, assumptions had to be made about what kinds of errors could occur, and those assumptions aren't always accurate. As a result, systems are often designed to protect against a broad range of potential errors, many of which very likely will never happen, leading to an inefficient use of the system's resources.

Puri’s research is at the intersection of quantum optics — that is, how light interacts with matter — and quantum information theory. It’s a background that gives her a good understanding of the hardware physics and the actual noise in the system. And that provides a clearer picture of potential errors to anticipate and correct.

Because they’re so incredibly small, qubits — fragile bits of quantum information — are particularly susceptible to any perturbations in their environment. Electromagnetic fields, pressure changes and other disturbances — referred to as noise — can destroy the phenomena that make quantum computing so potentially powerful.

“The errors are quite large in the system, so you need to really think about how to optimize your error-correction code more than you do in the classical systems,” said Puri, assistant professor of applied physics.

The eventual successful application of quantum technology, she said, will require efficient and active quantum error correction to protect the fragile quantum states. Puri is working toward this goal with a combination of robust quantum circuit engineering, tailoring error correction codes for specific noise models, and engineering qubits with inherent noise protection.

“For a long time, people would usually design codes to correct for noise in a quantum system, just assuming a general kind of noise model to describe the system,” she said. “But because I was coming from more of a hardware physics background, this was just bizarre to me. How can you make codes to correct noise when you don’t understand the noise that’s particularly affecting the hardware?”

Puri designs hardware in such a way that the noise is of a very specific nature, and easier to correct. Once the hardware has been tailored, she can design much more effective error-correcting codes. In 2020, Puri and her research group published a paper introducing a specific quantum element that enabled the realization of a tailored error-correcting code, which could perform much better than a general code. That helped revive interest within the field in the idea of tailoring a system for more effective error correction.

“Since then, we have identified other sorts of physical hardware where you can design other types of noise and then develop how to do error correction for that specific tailored noise,” she said.
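
A standard textbook illustration of why matching a code to the noise pays off is the bit-flip repetition code, which stores one logical qubit in several physical qubits and corrects bit flips by majority vote. The Python sketch below computes its logical error rate exactly, assuming bit flips and phase flips strike each qubit independently. This is a generic example chosen for clarity, not the code from Puri's 2020 paper, and the function names are hypothetical.

```python
from math import comb


def majority_vote_failure(p: float, n: int) -> float:
    """Probability that more than half of n qubits suffer a bit flip."""
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k)
               for k in range(n // 2 + 1, n + 1))


def odd_phase_flip(p: float, n: int) -> float:
    """Probability of an odd number of phase flips among n qubits.

    The bit-flip repetition code cannot detect phase flips, and any odd
    number of them flips the phase of the encoded (logical) state.
    """
    return (1 - (1 - 2 * p) ** n) / 2


def logical_error_rate(p_bit: float, p_phase: float, n: int = 3) -> float:
    """Logical error rate of an n-qubit bit-flip repetition code (n odd)."""
    survives = (1 - majority_vote_failure(p_bit, n)) * (1 - odd_phase_flip(p_phase, n))
    return 1 - survives


# Roughly the same per-qubit error budget, split two different ways.
print("biased noise:  ", logical_error_rate(0.05, 0.0))
print("balanced noise:", logical_error_rate(0.025, 0.025))
```

When the noise is almost entirely bit flips, the code suppresses errors well, and adding more qubits suppresses them further. When the same budget is split evenly between bit flips and phase flips, the code does worse than an unprotected qubit, because it cannot see phase flips at all. Tailoring the hardware so that one kind of error dominates is what lets a simpler, cheaper code do the job.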